Deep Dive into Machine Learning: Decision Tree and Random Forest

Note: I prepared these notes for my own reference while learning the topic, drawing on various sources. Hopefully they will help others understand the algorithms in a simple manner.

What is a Decision Tree:

A decision tree is a supervised machine learning algorithm used for both classification and regression tasks. It works by creating a tree-like model of decisions and their possible consequences.

Structure of the Decision Tree:

Root node: The topmost node in the tree, representing the starting point of the decision-making process.

Internal Nodes: Nodes that test the value of a certain attribute and split the data based on the result.

Edges: The connections between nodes, representing the possible outcomes of a decision. These are also called branches.

Leaves: These are the terminal nodes that predict outcomes.
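To make the structure concrete, here is a minimal sketch of a tree as a data structure, together with the root-to-leaf walk used for prediction. The `Node` and `Leaf` classes, the `predict` function, and the loan features and thresholds (`income`, `credit_score`) are all hypothetical names chosen for illustration, and the sketch assumes binary splits on numeric attributes.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    prediction: str  # outcome stored at a terminal node


@dataclass
class Node:
    feature: str                 # attribute tested at this node
    threshold: float             # split value for that attribute
    left: Union["Node", Leaf]    # branch followed when value <= threshold
    right: Union["Node", Leaf]   # branch followed when value > threshold


def predict(tree, sample):
    """Walk from the root along the branches until a leaf is reached."""
    node = tree
    while isinstance(node, Node):
        if sample[node.feature] <= node.threshold:
            node = node.left
        else:
            node = node.right
    return node.prediction


# Hypothetical hand-built tree: the root tests income,
# one internal node tests credit score, and three leaves hold outcomes.
tree = Node(
    feature="income",
    threshold=50000,
    left=Leaf("reject"),
    right=Node(
        feature="credit_score",
        threshold=650,
        left=Leaf("reject"),
        right=Leaf("approve"),
    ),
)

print(predict(tree, {"income": 72000, "credit_score": 700}))
```

In practice the tree is learned from data rather than written by hand; the sketch only shows how the root, internal nodes, branches, and leaves fit together.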

How a Decision Tree Works:

A decision tree works by recursively splitting the data into subsets based on the values of its features, aiming to create pure subsets where all data points belong to the same class. Here’s a breakdown of how it works:

1. Root Node: The algorithm starts with the entire dataset at the root node.

2. Attribute Selection: It selects the best attribute (feature) to split the data based on a criterion like information gain.

3. Splitting: The data is split into subsets based on the chosen attribute’s values, creating branches from the root node.

4. Recursion: The previous two steps (attribute selection and splitting) are repeated for each subset until a stopping condition is met:

- Pure Node: All data points in a subset belong to the same class.

- No More Attributes: There are no more features to split on.

- Maximum Depth: The tree reaches a predefined maximum depth.

5. Prediction: To make a prediction for a new data point, the algorithm traverses the tree from the root node, following the branches based on the feature values until it reaches a leaf node. The class label of the leaf node is the predicted class for the data point.
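The attribute selection step can be sketched with entropy and information gain. The example below is a rough illustration, assuming categorical features and a tiny made-up loan dataset; the function names (`entropy`, `information_gain`, `best_feature`) and the feature names are assumptions for this sketch, not part of any library.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum(
        (count / total) * math.log2(count / total)
        for count in Counter(labels).values()
    )


def information_gain(rows, labels, feature):
    """Entropy of the parent minus the weighted entropy of the subsets
    obtained by splitting on each distinct value of `feature`."""
    total = len(labels)
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    weighted = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - weighted


def best_feature(rows, labels, features):
    """Pick the feature whose split yields the highest information gain."""
    return max(features, key=lambda f: information_gain(rows, labels, f))


# Made-up data: "employed" separates the classes perfectly,
# while "owns_home" tells us nothing about the label.
rows = [
    {"employed": "yes", "owns_home": "yes"},
    {"employed": "yes", "owns_home": "no"},
    {"employed": "no", "owns_home": "yes"},
    {"employed": "no", "owns_home": "no"},
]
labels = ["approve", "approve", "reject", "reject"]

for name in ("employed", "owns_home"):
    print(name, information_gain(rows, labels, name))
print("best split:", best_feature(rows, labels, ["employed", "owns_home"]))
```

A full tree builder would call `best_feature` on each subset in turn and stop when a subset is pure, no features remain, or the maximum depth is reached.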

When to use Decision Tree:

A decision tree can be used in multiple scenarios.

· Classification:

When we need to categorize data into distinct classes.

· Regression:

When we need to predict a continuous numerical value.

Example:

Let’s say there are multiple applicants for a loan. Based on various features of the applicants, a decision tree can assess their creditworthiness and identify which loan applications should be approved and which should not.

When not to use Decision Tree:

· Decision trees can struggle with high-dimensional data: with many features, the tree can fit noise in the training set, which leads to overfitting.

· Decision trees can be biased towards the majority class in imbalanced datasets.
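Both issues can often be reduced, though not eliminated, through the classifier's settings. The sketch below assumes scikit-learn is installed and uses a synthetic imbalanced dataset from `make_classification`; the specific values (`max_depth=3`, `min_samples_leaf=5`) are arbitrary choices for illustration, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with roughly a 90/10 class imbalance (illustrative only).
X, y = make_classification(
    n_samples=300, n_features=10, weights=[0.9, 0.1], random_state=0
)

tree = DecisionTreeClassifier(
    max_depth=3,              # limit tree growth to curb overfitting
    min_samples_leaf=5,       # avoid leaves that fit only a few points
    class_weight="balanced",  # weight classes inversely to their frequency
    random_state=0,
)
tree.fit(X, y)

print("depth of fitted tree:", tree.get_depth())
```

Whether these settings help on a given dataset should be checked with a held-out test set or cross-validation.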

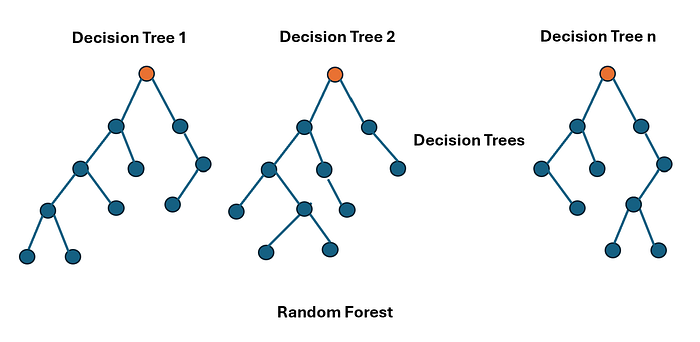

What is Random Forest:

A Random Forest is a supervised machine learning algorithm that uses an ensemble of decision trees to make predictions. It’s a powerful algorithm known for its accuracy and robustness, making it applicable to both classification and regression problems.

How Random Forest Works:

- Bootstrapping:

The algorithm creates multiple random subsets of the original dataset (with replacement). Each subset is used to build a decision tree.

- Feature Randomness:

At each node of the decision tree, a random subset of features is considered for splitting. This reduces correlation among trees and improves the model’s ability to generalize.

- Building Decision Trees:

Each decision tree is built independently using the randomly selected data and features.

- Aggregation:

For classification, the final prediction is made by taking the majority vote of all the trees in the forest. For regression, the final prediction is the average of the predictions from all trees.
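The bootstrapping, feature randomness, and aggregation steps can be sketched in plain Python. The helper names below (`bootstrap_sample`, `random_feature_subset`, `majority_vote`, `average`) are hypothetical and only illustrate the idea; training the individual trees is left out.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) items with replacement, so some rows repeat
    and others are left out of this tree's training set."""
    return [rng.choice(rows) for _ in rows]


def random_feature_subset(features, k, rng):
    """Pick k distinct features to consider at one split."""
    return rng.sample(features, k)


def majority_vote(predictions):
    """Classification: return the class predicted by the most trees."""
    return Counter(predictions).most_common(1)[0][0]


def average(predictions):
    """Regression: return the mean of the trees' predictions."""
    return sum(predictions) / len(predictions)


rng = random.Random(42)  # fixed seed so the sketch is repeatable
rows = list(range(10))   # stand-in for ten training rows

print(bootstrap_sample(rows, rng))
print(random_feature_subset(["age", "income", "credit_score"], 2, rng))
print(majority_vote(["approve", "reject", "approve"]))
print(average([2.0, 4.0, 6.0]))
```

In a real forest, each tree would be trained on its own bootstrap sample, and `random_feature_subset` would be called afresh at every node.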

When to Use Random Forest:

- Classification and Regression:

Random Forest can be used for both classification and regression tasks.

- High Accuracy:

By combining the predictions of many trees, Random Forest usually achieves higher accuracy than a single decision tree.

- Robust to Overfitting:

Random Forest is less prone to overfitting than individual decision trees due to its ensemble nature.

When Not to Use Random Forest:

Random Forest is computationally expensive because it trains and evaluates many trees. If you need extremely fast real-time predictions, Random Forest might not be the best choice.

Steps to implement Decision Tree:

import libraries:

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

%matplotlib inline

Read the dataframe

df = pd.read_csv('kyphosis.csv')

Make a pair plot with sns.pairplot to see the high-level relationships among the columns

sns.pairplot(df, hue='Kyphosis')

Now it's time to split our data into a training set and a testing set!

from sklearn.model_selection import train_test_split

X = df.drop('Kyphosis', axis=1)

y = df['Kyphosis']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

Implement Decision Tree

from sklearn.tree import DecisionTreeClassifier

Initialize Decision Tree Instance:

dtree = DecisionTreeClassifier()

Fit the decision tree to the training data.

dtree.fit(X_train, y_train)

Predict the Decision Tree Outcome

pred = dtree.predict(X_test)

Verify the classification report and confusion matrix

from sklearn.metrics import classification_report, confusion_matrix

print(confusion_matrix(y_test, pred))

print(classification_report(y_test, pred))

Steps to implement Random Forest:

Import the random forest classifier

from sklearn.ensemble import RandomForestClassifier

Create an instance of RandomForestClassifier by providing n_estimators

rfc = RandomForestClassifier(n_estimators=200)

Train the random forest model

rfc.fit(X_train, y_train)

Predict on the test data with the trained model

rfc_pred = rfc.predict(X_test)

Print the confusion matrix and classification report

print(confusion_matrix(y_test, rfc_pred))

print(classification_report(y_test, rfc_pred))

Compare the performance of the decision tree and random forest.
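One simple way to make that comparison is to score both models on the same test split. The sketch below is self-contained, so it uses synthetic data from `make_classification` instead of the kyphosis file, and fixes `random_state` so the run is repeatable; accuracy is only one of several metrics that could be compared, and the numbers it prints say nothing about the kyphosis results.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (an assumption for this sketch).
X, y = make_classification(n_samples=500, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

models = {
    "decision tree": DecisionTreeClassifier(random_state=0),
    "random forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

# Train each model on the same training set and score it on the same test set.
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))
    print(f"{name}: accuracy = {scores[name]:.3f}")
```

With the notebook's data, the same loop could reuse the existing `X_train`, `X_test`, `y_train`, and `y_test` variables in place of the synthetic split.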

Conclusion: On large datasets, a random forest generally predicts more accurately than a single decision tree, but it is computationally more expensive and needs more resources. When real-time prediction speed is critical, a random forest might not be a good fit; when predictive accuracy matters most, it is usually the better choice. Choose the algorithm based on the need.

The GitHub link for the working notebook on decision tree and random forest:

https://github.com/SwarnaPatel/Python_MachineLearning_DecisionTreeAndRandomForest