Deep Dive Into Machine Learning: K-Nearest Neighbors

What is KNN:

K-nearest neighbors (KNN) is a supervised machine learning technique that is used to handle both classification and regression tasks.

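In classification, an object is classified by a plurality vote of its neighbors: it is assigned to the class most common among its k nearest neighbors, where k is a positive integer (typically small). In regression, the prediction is instead the average of the values of its k nearest neighbors. "Nearest" is measured with a distance metric, most commonly Euclidean distance.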
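To make the voting rule concrete, here is a minimal from-scratch sketch in NumPy (not how scikit-learn implements it internally). It measures the Euclidean distance from a query to every training point, keeps the k closest, and then either takes a plurality vote (classification) or averages their values (regression). The function names and the toy data are hypothetical.

```python
from collections import Counter

import numpy as np


def k_nearest_indices(X_train, x_query, k):
    """Return indices of the k training points closest to x_query (Euclidean)."""
    distances = np.linalg.norm(X_train - x_query, axis=1)
    return np.argsort(distances)[:k]


def knn_classify(X_train, y_train, x_query, k):
    """Plurality vote among the labels of the k nearest neighbors."""
    idx = k_nearest_indices(X_train, x_query, k)
    votes = Counter(y_train[idx])
    return votes.most_common(1)[0][0]


def knn_regress(X_train, y_train, x_query, k):
    """Average target value of the k nearest neighbors."""
    idx = k_nearest_indices(X_train, x_query, k)
    return float(np.mean(y_train[idx]))


# Hypothetical toy data: five 2-D points with a class label and a numeric target
X_train = np.array([[1.0, 1.0], [1.5, 2.0], [3.0, 4.0], [5.0, 7.0], [3.5, 5.0]])
y_class = np.array(["A", "A", "B", "B", "B"])
y_value = np.array([1.0, 2.0, 4.0, 7.0, 5.0])

query = np.array([1.2, 1.5])
label = knn_classify(X_train, y_class, query, k=3)
value = knn_regress(X_train, y_value, query, k=3)
print(label)  # A  (two "A" neighbors outvote one "B")
print(value)  # mean of 1.0, 2.0 and 4.0
```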

When to use KNN:

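KNN is a good fit when you have a labeled dataset of small to moderate size and the relationship between the features and the target is hard to capture with a simple model: it makes no assumptions about the underlying data distribution, has essentially no training phase (it just stores the data), and can achieve high accuracy in a wide variety of prediction-type problems.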

Example:

Let’s say we have the length and height of a number of bottle gourds and raw bananas. A new vegetable arrives with only its length and height measured, and we need to decide whether it is a bottle gourd or a raw banana. KNN finds the k labeled vegetables closest to the new one and assigns the class that is most common among them. With k = 3, the three nearest vegetables vote; with k = 6, a larger neighborhood votes, and the majority, and therefore the predicted class, can change.
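The vegetable example can be sketched with scikit-learn's KNeighborsClassifier. The measurements below are hypothetical, chosen so that with k = 3 the two raw bananas closest to the new vegetable outvote one bottle gourd, while with k = 6 the four next-closest bottle gourds outvote the two bananas:

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical measurements in centimetres: [length, height]
X = np.array([
    [22, 5], [21, 5], [15, 3], [14, 3],   # raw bananas
    [27, 8], [28, 8], [29, 9], [30, 9],   # bottle gourds
])
y = np.array(["raw banana"] * 4 + ["bottle gourd"] * 4)

new_vegetable = np.array([[24, 6]])

predictions = {}
for k in (3, 6):
    knn = KNeighborsClassifier(n_neighbors=k)  # Euclidean distance, uniform votes by default
    knn.fit(X, y)
    predictions[k] = knn.predict(new_vegetable)[0]
    print(k, predictions[k])
# 3 raw banana
# 6 bottle gourd
```

Both features share the same unit here, so no scaling is applied; with features on different scales you would standardize first.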

When not to use KNN:

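Implementing KNN on a large dataset is usually not a good decision: the model has to keep the entire training set in memory, and with brute-force search every prediction computes the distance from the new point to every stored point and then sorts those distances to pick out the k closest. The cost grows with both the number of rows and the number of features.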
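scikit-learn can soften the per-query cost with a tree index. The sketch below, on synthetic random data (an assumption, not the article's dataset), shows that NearestNeighbors with algorithm="brute" and algorithm="kd_tree" return the same neighbor distances; the tree simply avoids comparing each query against every point. Either way the whole training set still has to be stored, and tree indexes help less as the number of features grows.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

rng = np.random.default_rng(0)
X_train = rng.normal(size=(10_000, 3))  # synthetic stored training set
queries = rng.normal(size=(5, 3))       # five new points to look up

# Brute force: every query is compared with all 10,000 stored points
brute = NearestNeighbors(n_neighbors=5, algorithm="brute").fit(X_train)
# k-d tree: an index is built once at fit time so queries can skip distant regions
tree = NearestNeighbors(n_neighbors=5, algorithm="kd_tree").fit(X_train)

d_brute, i_brute = brute.kneighbors(queries)  # distances come back sorted ascending
d_tree, i_tree = tree.kneighbors(queries)

print(np.allclose(d_brute, d_tree))  # True: same neighbors, different search strategy
```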

Steps to implement KNN:

Import libraries:

```python
import pandas as pd
import numpy as np
import seaborn as sns
import matplotlib.pyplot as plt

# Jupyter-only magic: render plots inline in the notebook
%matplotlib inline
```

Read the data into a DataFrame:

```python
df = pd.read_csv('data.csv')
```

Make a sns.pairplot to see the high-level relationships among columns:

```python
sns.pairplot(df)
```

Standardize the Variables

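KNN predicts from distances, so a feature measured on a large scale would dominate the others. To standardize the variables, import StandardScaler from scikit-learn:

```python
from sklearn.preprocessing import StandardScaler
```

Create a StandardScaler() object called scaler:

```python
scaler = StandardScaler()
```

Fit scaler to the features (every column except the target):

```python
scaler.fit(df.drop('TARGET CLASS', axis=1))
```

Use the .transform() method to transform the features to a scaled version:

```python
scaled_features = scaler.transform(df.drop('TARGET CLASS', axis=1))
```

Convert the scaled features to a DataFrame:

```python
# Assumes 'TARGET CLASS' is the last column, so df.columns[:-1] are the feature names
df_feat = pd.DataFrame(scaled_features, columns=df.columns[:-1])
```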
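StandardScaler rescales each column to z = (x - mean) / std, so every feature ends up with mean 0 and standard deviation 1. A small check on a hypothetical two-column frame (the column names and values are made up) shows that the scaler matches the formula computed by hand with the population standard deviation (ddof=0):

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical features on very different scales
toy = pd.DataFrame({
    "height_cm": [150.0, 160.0, 170.0, 180.0],
    "income": [30_000.0, 45_000.0, 60_000.0, 90_000.0],
})

scaled = StandardScaler().fit_transform(toy)

# Same thing by hand: subtract the column mean, divide by the population std (ddof=0)
manual = (toy - toy.mean()) / toy.std(ddof=0)

print(np.allclose(scaled, manual.to_numpy()))  # True
```

Without this step, income differences in the tens of thousands would swamp height differences of a few centimetres in every distance calculation.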

Train Test Split

Use train_test_split to split your data into a training set (70%) and a testing set (30%):

```python
from sklearn.model_selection import train_test_split

X = df_feat
y = df['TARGET CLASS']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=101)
```

Using KNN (K Nearest Neighbors)

Import KNeighborsClassifier from scikit-learn:

```python
from sklearn.neighbors import KNeighborsClassifier
```

Create a KNN model instance with n_neighbors=1:

```python
knn = KNeighborsClassifier(n_neighbors=1)
```

Fit this KNN model to the training data:

```python
knn.fit(X_train, y_train)
```

Predictions and Evaluations

Let’s evaluate our KNN model!

Use the predict method to predict values using your KNN model and X_test:

```python
pred = knn.predict(X_test)
```

Create a confusion matrix and classification report:

```python
from sklearn.metrics import classification_report, confusion_matrix

print(confusion_matrix(y_test, pred))
print(classification_report(y_test, pred))
```

Note the precision for each class in the report:

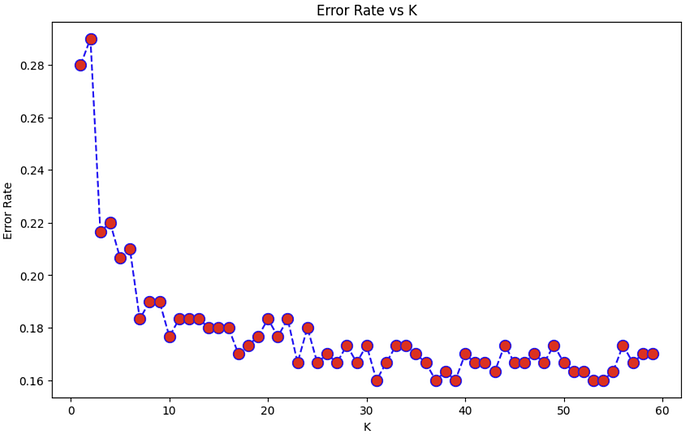
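To read the report: each row of the confusion matrix is a true class and each column a predicted class. For a given class, precision is TP / (TP + FP), the share of predictions of that class that were correct, and recall is TP / (TP + FN), the share of that class's real members that were found. A toy binary example with hypothetical labels:

```python
from sklearn.metrics import confusion_matrix, precision_score, recall_score

y_true = [0, 1, 1, 0]  # hypothetical ground truth
y_pred = [0, 1, 0, 0]  # hypothetical predictions

cm = confusion_matrix(y_true, y_pred)
print(cm)
# [[2 0]
#  [1 1]]

tn, fp, fn, tp = cm.ravel()  # binary layout: [[TN, FP], [FN, TP]]
precision = tp / (tp + fp)   # of the items predicted as class 1, how many really are
recall = tp / (tp + fn)      # of the real class-1 items, how many were found
print(precision, recall)     # 1.0 0.5
```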

Choosing a K Value

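The choice of k can significantly affect the model's performance. A small k makes each prediction depend on very few (possibly noisy) points and can lead to overfitting, while a large k smooths over real structure in the data and can lead to underfitting. We need a k that balances the two; for binary classification, an odd k also avoids tied votes.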
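One way to see why a very small k overfits: with k = 1, every training point is its own nearest neighbor, so training accuracy is perfect no matter how well the model generalizes. This sketch uses synthetic data from make_classification as a stand-in for the article's dataset and compares training and test accuracy for k = 1 and a larger k (k = 15, an arbitrary choice):

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in data (assumption); flip_y adds label noise that k=1 will memorize
X, y = make_classification(n_samples=500, n_features=10, flip_y=0.1, random_state=101)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=101)

results = {}
for k in (1, 15):
    model = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
    results[k] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"k={k}: train accuracy={results[k][0]:.3f}, test accuracy={results[k][1]:.3f}")
```

The gap between training and test accuracy at k = 1 is the overfitting the paragraph describes; the exact numbers depend on the data.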

Let’s go ahead and use the elbow method to pick a good K Value!

Create a for loop that trains KNN models with k from 1 to 59 and records each model's error rate (the fraction of misclassified test points) in a list:

```python
error_rate = []

for i in range(1, 60):
    knn = KNeighborsClassifier(n_neighbors=i)
    knn.fit(X_train, y_train)
    pred_i = knn.predict(X_test)
    # Error rate = share of test predictions that do not match the true labels
    error_rate.append(np.mean(pred_i != y_test))
```

Graph:

Now plot the error rate against k using the values collected in the loop:

```python
plt.figure(figsize=(10, 6))
plt.plot(range(1, 60), error_rate, color='blue', linestyle='--', marker='o',
         markerfacecolor='red', markersize=10)
plt.title('Error Rate vs K')
plt.xlabel('K')
plt.ylabel('Error Rate')
plt.show()
```
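The loop above scores each k on the test set, so the test set also influences which model is chosen and the final test score can be optimistic. A common alternative, swapped in here rather than taken from the article, is to choose k by 5-fold cross-validation on the training split and touch the test set only once at the end. This sketch uses synthetic make_classification data as a stand-in for data.csv and puts StandardScaler inside a pipeline so it is refit on each fold:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the article's data (assumption: binary target)
X, y = make_classification(n_samples=500, n_features=10, random_state=101)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=101)

k_values = range(1, 40)
cv_error = []
for k in k_values:
    # The scaler is refit inside each fold, so no fold leaks statistics into another
    model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=k))
    scores = cross_val_score(model, X_train, y_train, cv=5)
    cv_error.append(1 - scores.mean())

best_k = k_values[int(np.argmin(cv_error))]  # k with the lowest cross-validated error

final = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=best_k))
final.fit(X_train, y_train)
test_accuracy = final.score(X_test, y_test)  # the test set is used only here
print(best_k, test_accuracy)
```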

Retrain with new K Value

Retrain the model with the k value you choose from the plot (here k = 30; the best value depends on your data), then redo the classification report and the confusion matrix:

```python
knn = KNeighborsClassifier(n_neighbors=30)
knn.fit(X_train, y_train)
pred = knn.predict(X_test)
```

Check whether the precision has improved:

```python
print(confusion_matrix(y_test, pred))
print()
print(classification_report(y_test, pred))
```

Working Jupyter Notebook:

https://github.com/SwarnaPatel/Python_MachineLearning_KNN